Matrix product states and Tucker decomposition

2019-11-15By representing a quantum state in a product basis , one obtains information about the state in the form of a rank- tensor:

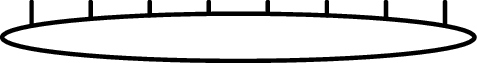

Here's what looks like in dead bug form for sites:

If each local basis has dimension (corresponding to a single site, spin, orbital, mode, or whatever else you'd like), then this tensor has elements in total.

Because exponential growth of this straightforward approach prevents the study of large systems, attempts have been made to reduce the total number of elements by decomposing into several smaller tensors.

Two particularly successful examples of this are:

- matrix product states (MPSs), which are used in the density matrix renormalization group (DMRG), and which I've discussed before; and

- Tucker decomposition (TD), which appears in multiconfiguration time-dependent Hartree (MCTDH).

My goal in the following is to briefly demonstrate the surface differences between MPSs and TD (as well as the latter's hierarchical counterpart, HTD), with the aid of illustrations to elucidate the mathematics.

Matrix product states

The general expression for a matrix product state is or where the number of terms in each sum over is known as the bond dimension. Exploiting the repeated matrix multiplication structure, we may also write which is the form that gives this decomposition its name.

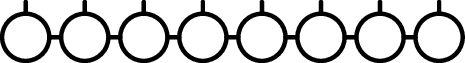

This may be expressed in the concrete case of sites as

The vertical lines are the physical indices (each running from 1 to ), while the horizontal lines are the bond indices (from 1 to ).

The tensor contractions (sums over ) are indicated by linking the indices.

It is important to point out that this is an inherently one-dimensional decomposition: each tensor is linked exclusively to adjacent tensors.

It's therefore no surprise that MPSs appear at the heart of DMRG, which has been used extensively for finding ground states of one-dimensional systems.
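As a minimal sketch of the matrix product form, the snippet below builds a random MPS with NumPy and evaluates single elements by multiplying one matrix per site. The sizes `N`, `d` and `D`, the `(left bond, physical, right bond)` storage convention with dummy end bonds of dimension 1, and the helper name `mps_element` are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N, d, D = 5, 2, 3  # hypothetical sizes: sites, local dimension, bond dimension

# One (left bond, physical, right bond) tensor per site.
# The two end bonds are dummies of dimension 1.
bonds = [1] + [D] * (N - 1) + [1]
sites = [rng.standard_normal((bonds[k], d, bonds[k + 1])) for k in range(N)]


def mps_element(sites, indices):
    """Evaluate one tensor element as a product of matrices, one per site."""
    out = np.eye(1)
    for M, i in zip(sites, indices):
        out = out @ M[:, i, :]  # fixing the physical index leaves a matrix
    return out[0, 0]


# The same numbers, obtained by contracting every bond index at once.
full = np.einsum("aib,bjc,ckd,dle,emf->ijklm", *sites)

# Total number of stored elements in the MPS.
n_elements = sum(M.size for M in sites)

print(np.isclose(full[1, 0, 1, 1, 0], mps_element(sites, (1, 0, 1, 1, 0))))
```

Fixing the physical index at each site leaves an ordinary matrix, so one element of the full tensor costs only a chain of small matrix products.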

The number of elements in this construct scales as $N d D^2$, where $D$ is the bond dimension; if $D$ is constant, this is a drastic improvement over $d^N$! In fact, so long as $D$ is less than exponential in $N$ (which is the typical situation for one-dimensional systems), we should still gain from this decomposition when $N$ is sufficiently large.

Starting from a full tensor $A$, it's possible to obtain the MPS form by repeated singular value decomposition (SVD). This is not commonly done in practice, but it can be used to prove that this decomposition is exact ($\tilde{A} = A$) for some bond dimension $D$ that's big enough. The SVD is used on smaller tensors in DMRG in order to control the size of the MPS by keeping only the largest singular values.
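The repeated-SVD construction can be sketched in a few lines of NumPy. This is an illustration under assumptions, not how DMRG codes do it: the function names `tensor_to_mps` and `mps_to_tensor`, the optional `max_bond` truncation parameter, and the `(left bond, physical, right bond)` layout are all hypothetical choices.

```python
import numpy as np


def tensor_to_mps(A, max_bond=None):
    """Split a full tensor into MPS site tensors by repeated SVD.

    Returns arrays of shape (left bond, physical, right bond).
    If max_bond is given, only the largest singular values are kept.
    """
    dims = A.shape
    sites = []
    left = 1  # dimension of the bond entering the current site
    rest = A.reshape(left, -1)
    for d in dims[:-1]:
        # Rows: (left bond, physical index). Columns: all remaining indices.
        mat = rest.reshape(left * d, -1)
        U, s, Vh = np.linalg.svd(mat, full_matrices=False)
        keep = len(s) if max_bond is None else min(max_bond, len(s))
        sites.append(U[:, :keep].reshape(left, d, keep))
        # Absorb the singular values into the remainder and move right.
        rest = s[:keep, None] * Vh[:keep]
        left = keep
    sites.append(rest.reshape(left, dims[-1], 1))
    return sites


def mps_to_tensor(sites):
    """Contract all bond indices to rebuild the full tensor."""
    out = sites[0]
    for M in sites[1:]:
        out = np.tensordot(out, M, axes=([out.ndim - 1], [0]))
    return out.reshape(out.shape[1:-1])  # drop the dummy end bonds


rng = np.random.default_rng(0)
A = rng.standard_normal((2, 3, 2, 3))

exact = tensor_to_mps(A)
print([M.shape for M in exact])
print(np.allclose(mps_to_tensor(exact), A))  # no truncation: exact

truncated = tensor_to_mps(A, max_bond=2)
print(np.linalg.norm(mps_to_tensor(truncated) - A))  # truncation error
```

Without truncation the bonds grow as large as they need to, which is the sense in which the decomposition is exact for a big enough bond dimension.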

Tucker decomposition

Tucker decomposition of a tensor has the form or where is the rank- core tensor, and each is a rectangular matrix. Because each of the matrices shares an index with the core tensor, there is no linear-algebraic shorthand notation.

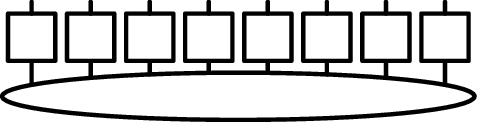

For sites, we have

In MCTDH terminology, the squares are the single-particle functions (SPFs), which project from the full -dimensional one-site space spanned by the primitive basis to a relevant subspace of dimension .

It's obvious that the exact tensor may be recovered () when and each SPF is the identity operator.

The number of elements scales as $n^N + N n d$ (the core tensor plus $N$ SPF matrices), growing exponentially with $N$. However, the hope is that $n$ can be much smaller than $d$, quelling the exponential growth a little bit. For many strictly one-dimensional systems, one doesn't expect this to be more compact than the MPS form (at least asymptotically). On the other hand, in the absence of any natural ordering to the sites (such as in two-dimensional systems or for vibrational modes), the bond dimension of an MPS could grow very rapidly with $N$, causing Tucker decomposition to fare much better in comparison.

In principle, Tucker decomposition of a tensor can be obtained via higher-order singular value decomposition (HOSVD). Though that's a bit of a misnomer, because the "core tensor" in a regular (matrix) SVD is the diagonal matrix of singular values, but the corresponding piece of the HOSVD is not diagonal in any sense.
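Here is a rough NumPy sketch of a truncated HOSVD, which also checks that the core tensor is not diagonal. The function names `hosvd` and `tucker_to_tensor`, and the choice of keeping the same number `n` of columns for every factor, are assumptions for illustration.

```python
import numpy as np


def hosvd(A, n):
    """Tucker-decompose A, keeping at most n columns per factor matrix."""
    factors = []
    for k in range(A.ndim):
        # Mode-k unfolding: index k labels the rows, everything else the columns.
        unfolded = np.moveaxis(A, k, 0).reshape(A.shape[k], -1)
        U, _, _ = np.linalg.svd(unfolded, full_matrices=False)
        factors.append(U[:, :n])
    core = A
    for U in factors:
        # Contract the leading index with U; the new index goes to the back,
        # so after A.ndim steps the indices are in their original order again.
        core = np.tensordot(core, U.conj(), axes=([0], [0]))
    return core, factors


def tucker_to_tensor(core, factors):
    """Rebuild the (approximate) full tensor from the core and factors."""
    out = core
    for U in factors:
        out = np.tensordot(out, U, axes=([0], [1]))
    return out


rng = np.random.default_rng(1)
A = rng.standard_normal((3, 3, 3))

core, factors = hosvd(A, 3)  # n = d: nothing is discarded
print(np.allclose(tucker_to_tensor(core, factors), A))

# Weight of the core outside its "diagonal" entries core[j, j, j].
on_diagonal = np.zeros(core.shape, dtype=bool)
on_diagonal[np.arange(3), np.arange(3), np.arange(3)] = True
print(np.linalg.norm(core[~on_diagonal]))
```

For a generic tensor the off-diagonal weight is far from zero, unlike the matrix of singular values in an ordinary SVD.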

Tucker decomposition with mode combination

In an effort to reduce the rank of the core tensor in Tucker decomposition, sites (modes) are sometimes combined together. This can be very beneficial for sites that are correlated and only live on a small subspace of their product space. This isn't fundamentally a different decomposition from plain TD, because it amounts to a relabelling of the primitive basis states, but it's still useful to take a peek at how it looks and feels.

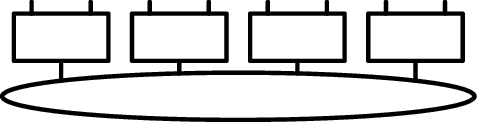

The picture that we get for when we group each pair of sites is

Now the core tensor is only a rank- tensor!

Of course, if each mode combination is a trivial one (a direct product index composed from the primitive indices), that's only an illusion and the core tensor simply reverts to itself.

Counting the number of elements begins to get tricky, because there is now freedom in the structure of the decomposition. If we assume a homogeneous grouping of $N/p$ SPFs, each combining $p$ sites, we find that the number of elements scales as $n^{N/p} + (N/p) n d^p$, which is largely reminiscent of the uncombined version. The trade-off here is a larger exponent in the second term in exchange for a reduction of the exponent in the first term.
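To get a feel for this trade-off, one can count elements directly from the tensor shapes. The sketch below assumes a homogeneous grouping in which every SPF combines the same number of sites `p` and keeps the same number `n` of functions. The function name `tucker_elements` and the example sizes are hypothetical, and holding `n` fixed while `p` changes is a simplification.

```python
from math import prod


def tucker_elements(N, d, n, p=1):
    """Count stored elements when each SPF combines p sites (p=1 is plain TD)."""
    if N % p:
        raise ValueError("p must divide N")
    groups = N // p
    core_shape = (n,) * groups  # one core index per combined mode
    spf_shape = (d ** p, n)     # combined primitive space -> n functions
    return prod(core_shape) + groups * prod(spf_shape)


N, d, n = 12, 10, 4  # hypothetical sizes
for p in (1, 2, 3, 4):
    print(p, tucker_elements(N, d, n, p))
```

With these particular numbers the total is smallest at `p = 2`: combining more sites keeps shrinking the core, but the SPF matrices grow as `d ** p` and soon dominate.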

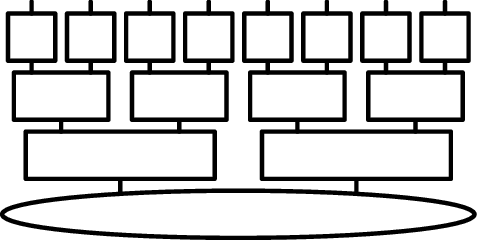

Hierarchical Tucker decomposition

A natural extension of the mode combination idea is to make it recursive.

At this point, the index wrangling gets quite unpleasant, especially if we want to be comprehensive, and it's quite frankly unnecessary for our purposes.

Instead, we'll try to remain comprehensible and just draw an example of hierarchical Tucker decomposition (HTD) for , where we've paired up two indices at each layer of the hierarchy, making a perfect binary tree:

More generally, HTD may include tensors of all sorts of shapes and sizes, and choosing how to structure it is not a trivial endeavour. In 2003, Wang and Thoss showed how to apply the usual MCTDH algorithms recursively to such a tree structure of tensors in multilayer multiconfiguration time-dependent Hartree (ML-MCTDH).

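To count the elements, we'll assume a perfect binary tree shape over $N$ sites (with $N$ a power of two), which will have $N - 1$ tensors spread across $\log_2 N$ layers. This implies a certain pattern in the problem (imagine splitting a two-dimensional lattice in half recursively), which will not always be present, so this isn't the most general form, but it's easy to analyze. If the interior indices have dimension $n$, we find that the total number of elements is $n^2 + (N/2 - 2) n^3 + (N/2) n d^2$ for $N \ge 4$: one rank-2 root, $N/2 - 2$ interior rank-3 tensors, and $N/2$ bottom-layer tensors that each carry two physical indices of dimension $d$. As long as $n$ is less than exponential in $N$, we have no exponential terms!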
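The count can be checked by listing the tensor shapes layer by layer. This is a sketch under the stated assumptions: a perfect binary tree, every interior index of the same dimension `n`, and a root with no upward index. The helper names `htd_shapes`, `count_elements` and `htd_closed_form` are hypothetical.

```python
from math import prod


def htd_shapes(N, d, n):
    """Tensor shapes, bottom layer first, for a perfect binary tree over N sites."""
    if N < 2 or N & (N - 1):
        raise ValueError("N must be a power of two, at least 2")
    layers = []
    width, child = N // 2, d  # the bottom layer pairs up physical indices
    while width >= 1:
        parent = (n,) if width > 1 else ()  # the root has no upward index
        layers.append([(child, child) + parent] * width)
        width //= 2
        child = n  # above the bottom layer, children are interior indices
    return layers


def count_elements(layers):
    """Total number of stored elements over all tensors in the tree."""
    return sum(prod(shape) for layer in layers for shape in layer)


def htd_closed_form(N, d, n):
    """Closed-form count for N >= 4: root + interior + bottom layer."""
    return n ** 2 + (N // 2 - 2) * n ** 3 + (N // 2) * n * d ** 2


layers = htd_shapes(8, 10, 4)
print(len(layers), sum(len(layer) for layer in layers))  # layers, tensors
print(count_elements(layers), htd_closed_form(8, 10, 4))
```

For 8 sites this gives 7 tensors in 3 layers, and the shape-by-shape count agrees with the closed form.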

Summary

Matrix product states (used in DMRG) and Tucker decompositions of all flavours (used in MCTDH) have some features in common: they are more compact than the full tensor that they approximate, and they can be made arbitrarily exact. (As promised, this was only a shallow inspection!) However, they also have some qualitative differences, and I hope that these are evident in the above diagrams.

The most important disparity is the presence of a core tensor in TD, compared to the decentralized nature of MPSs. The latter exploit the natural ordering of a one-dimensional system, while the former may also be freely applied to other geometries.

Plain Tucker decomposition and TD with mode combination will still have an exponentially-growing number of elements as one grows the system, while a matrix product state (when applied in an appropriate situation) will not. Hierarchical Tucker decomposition is also capable of sub-exponential scaling, and, unlike an MPS, it is not inherently limited to one-dimensional systems. Here are some scaling formulas all in one table:

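| Decomposition | Number of elements |
|---|---|
| MPS | $N d D^2$ |
| TD | $n^N + N n d$ |
| TD with combined SPFs | $n^{N/p} + (N/p) n d^p$ |
| Perfect binary HTD | $n^2 + (N/2 - 2) n^3 + (N/2) n d^2$ |

Here $N$ is the number of sites, $d$ the local basis dimension, $D$ the MPS bond dimension, $n$ the dimension of the SPF (interior) indices, and $p$ the number of sites combined per SPF.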