Sampling from a different Gaussian

2017-07-14One way to evaluate integral ratios of the form

⟨f⟩ϱ=∫dxϱ(x)∫dxϱ(x)f(x)

is using Monte Carlo integration.

If ϱ(x) is proportional to the pdf of a Gaussian distribution,

ϱ(x)∝exp(−2σ2(x−μ)2),

then sampling is very easy, with many languages and environments offering a function for rejection-free sampling (e.g. Julia's randn).

For example, we choose the function

f(x)=x2

and a Gaussian distribution ϱ(x) with mean μ=2.5 and standard deviation σ=1.5.

From the definition of the variance (σ2=⟨x2⟩−μ2), we see that

⟨f⟩ϱ=μ2+σ2=8.5.

If we perform the average

f¯=N1i=1∑Nf(xi)

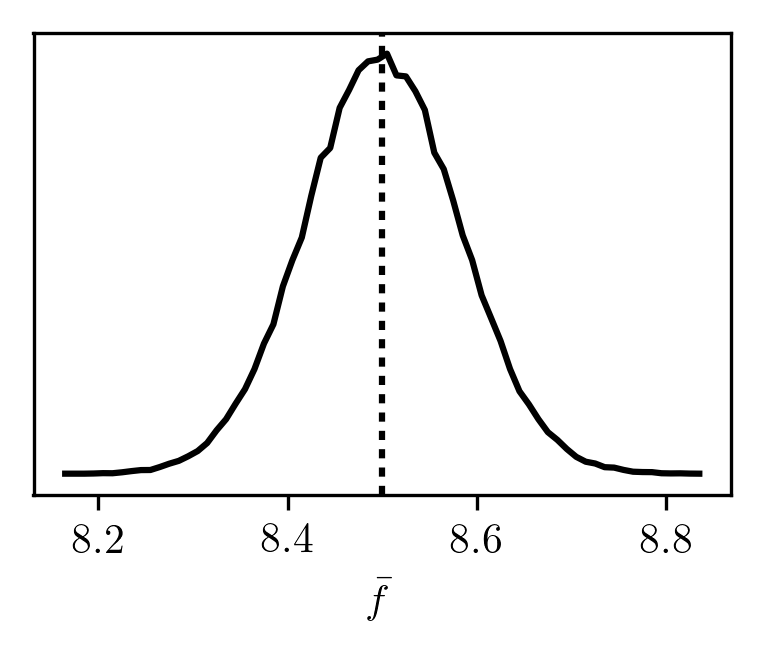

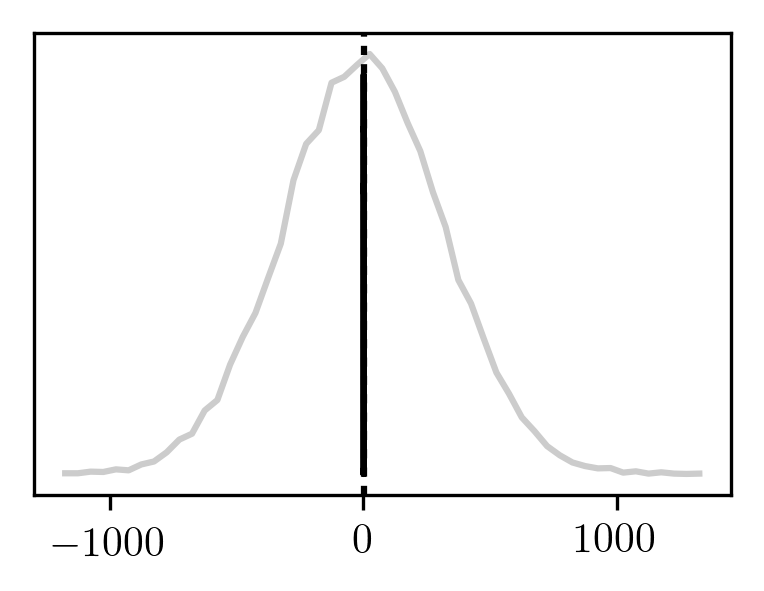

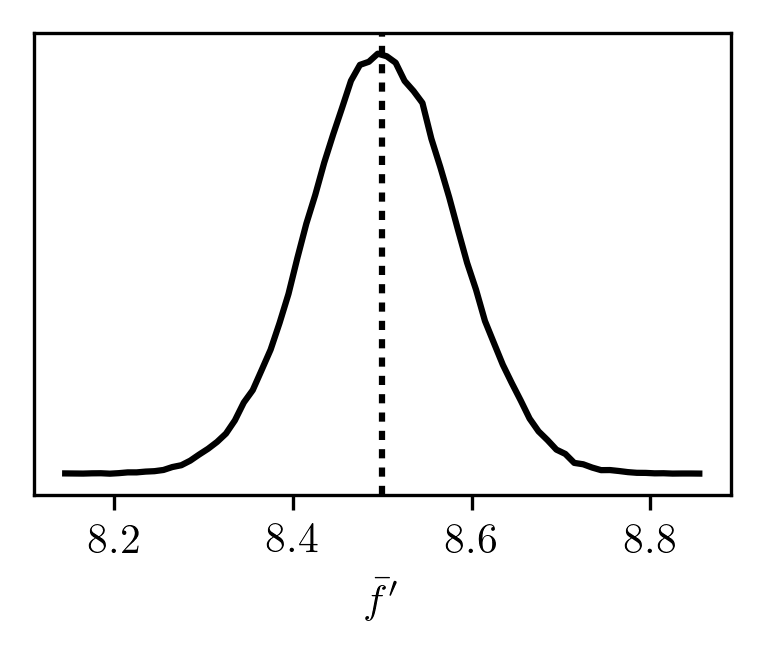

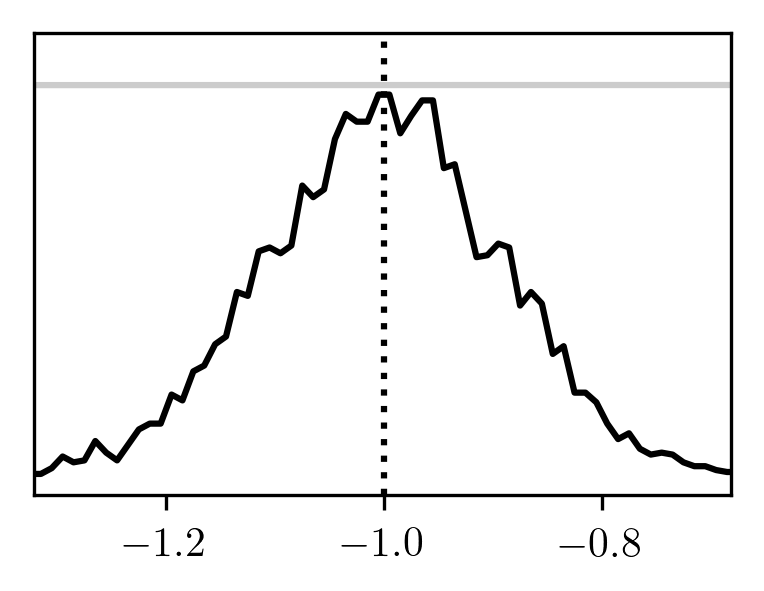

for N=104 samples and bin the results over many trials, the distribution looks like

Single Gaussian

Suppose that we want to evaluate the same integral ratio as before, but by sampling from a different Gaussian distribution ϱ′(x′) with mean μ′=−1.2 and standard deviation σ′=2.8.

We introduce

x′(x)=σσ′(x−μ)+μ′,

so that

ϱ(x)∝ϱ′(x′(x)).

This gives us

⟨f⟩ϱ=∫dxϱ′(x′(x))∫dxϱ′(x′(x))f(x),

to which we apply a change of variables from x to x′, yielding

⟨f⟩ϱ=∫dx′ϱ′(x′)∫dx′ϱ′(x′)f′(x′),

where

f′(x′)=f(x(x′))=f(σ′σ(x′−μ′)+μ).

We don't need to worry about

dx′dx=σ′σ,

because it would appear in both the numerator and denominator.

Hence, we see that

⟨f⟩ϱ=⟨f′⟩ϱ′.

We may verify that this is the case by sampling from ϱ′ and evaluating

f¯′=N1i=1∑Nf(σ′σ(xi′−μ′)+μ).

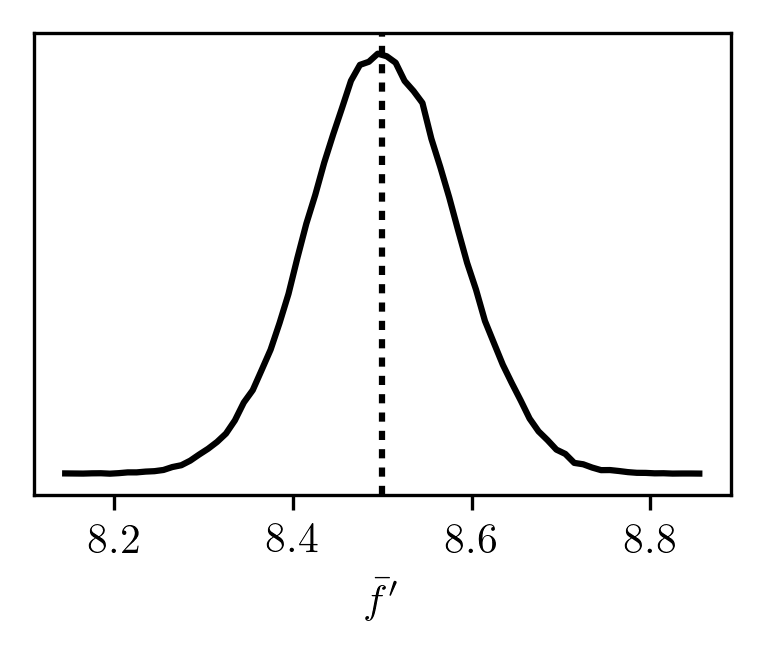

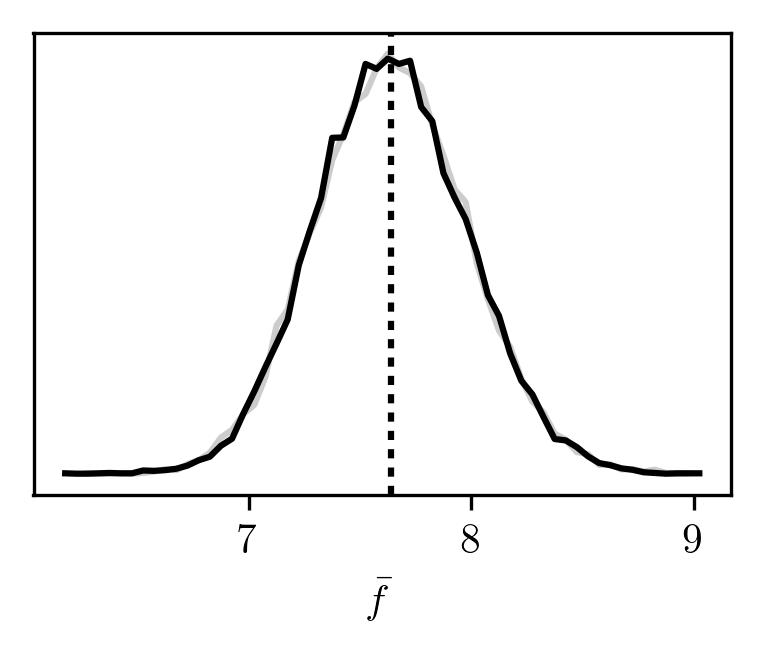

Binning the results from several trials, we obtain

As expected, this distribution looks very similar to the previous one.

As expected, this distribution looks very similar to the previous one.

From an implementation perspective, this result is incredibly trivial.

Since in practice we obtain random values from a standard Gaussian distribution (with zero mean and unit variance), sampling from a Gaussian with specific parameters amounts to a scaling and a shift:

| xs1 = 1.5 * randn(SAMPLE_SIZE) + 2.5

|

It is then clear that sampling with any other parameters and performing the described transformation is functionally identical:

| xs2 = 2.8 * randn(SAMPLE_SIZE) + (-1.2)

xs1 = (xs2 - (-1.2)) * 1.5 / 2.8 + 2.5

|

Multiple Gaussians

The case of several independent Gaussians is a straightforward generalization of the above.

In principle, multiple correlated Gaussians may always be transformed into independent Gaussians, so we may stop here.

However, it is interesting to see what the transformation for the sampled values is in terms of the original coordinates.

We now consider a distribution ϱ with mean vector μ⃗ and covariance matrix Σ, whose pdf is

ϱ(x⃗)∝exp(−21(x⃗−μ⃗)TΣ−1(x⃗−μ⃗)).

For convenience, we introduce A=Σ−1 and diagonalize it as A=STΛS.

If we have another distribution ϱ′ with parameters μ⃗′ and Σ′, then the choice

x⃗′(x⃗)=(S′)T√(Λ′)−1ΛS(x⃗−μ⃗)+μ⃗′

results in

ϱ(x⃗)∝ϱ′(x⃗′(x⃗)).

This is essentially identical to the univariate x′(x), but with the introduction of the transformation matrices S and S′ to decouple the coordinates.

As before, we have that

⟨f⟩ϱ=⟨f′⟩ϱ′,

where

f′(x⃗′)=f(x⃗(x⃗′))=f(ST√Λ−1Λ′S′(x⃗′−μ⃗′)+μ⃗).

Again, this is not surprising if we consider what is done operationally when sampling from independent standard Gaussian distributions.

We may check this result with

μ⃗=(−0.61.4),

Σ=(2113),

μ⃗′=(0.6−1.4),

Σ′=(3−1−12),

and

f(x⃗)=(x1+x2)2.

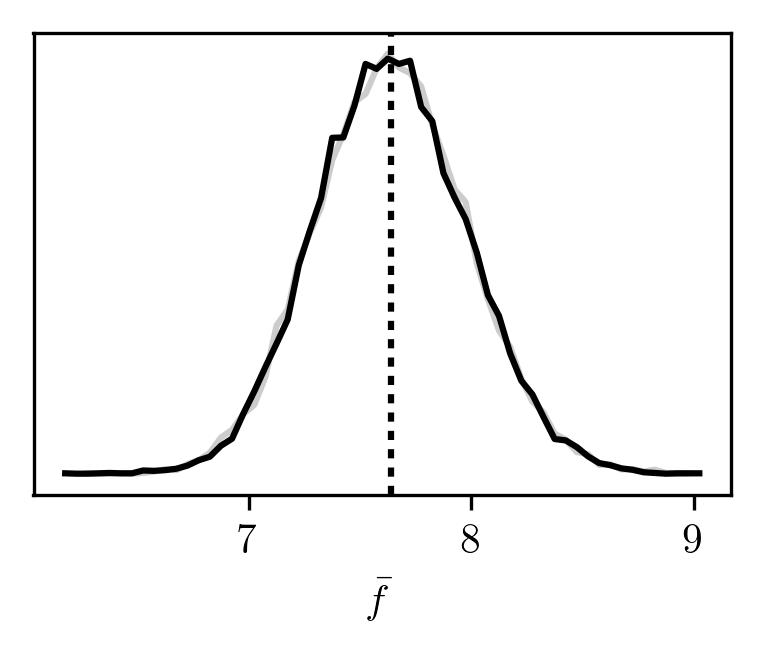

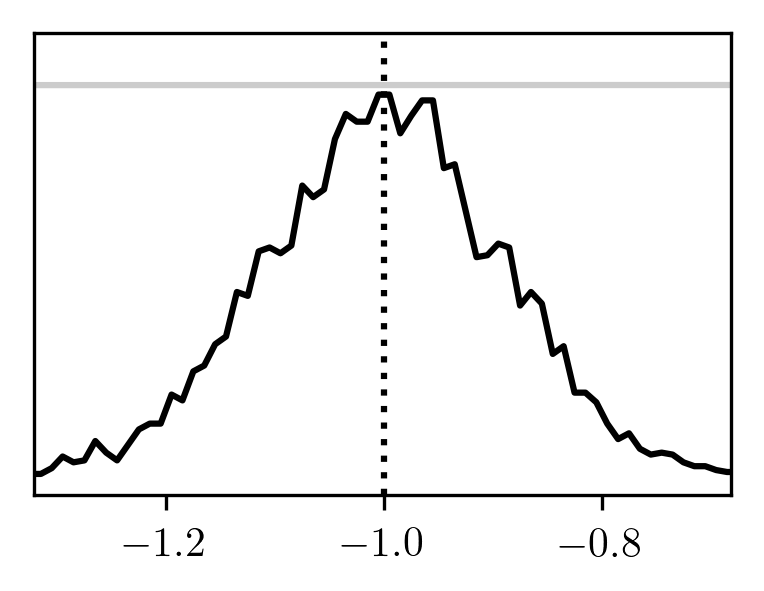

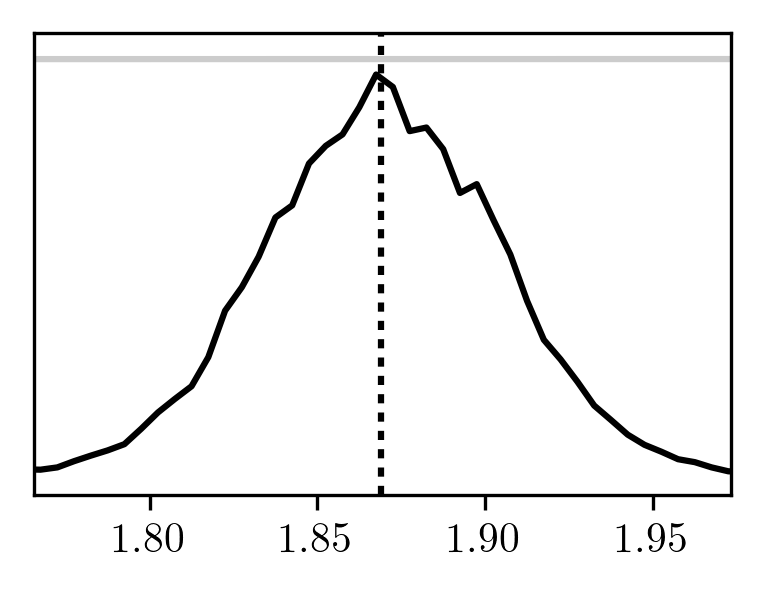

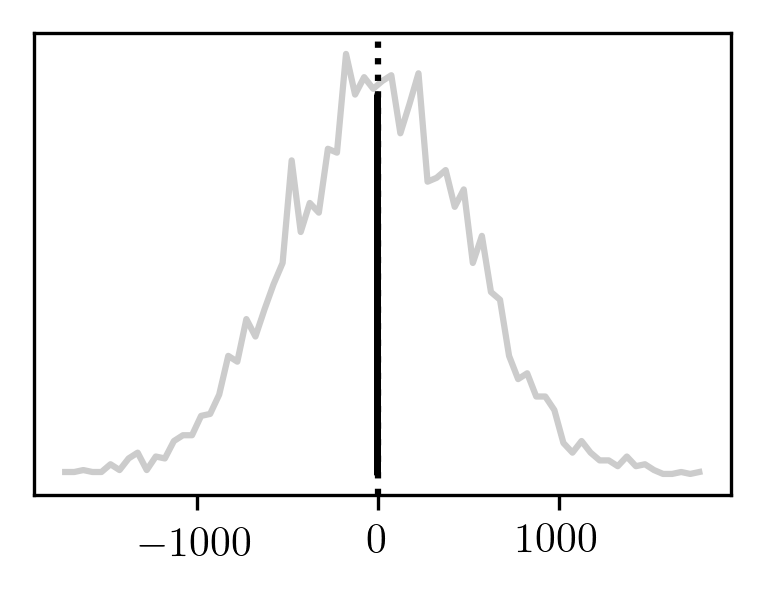

The resulting distributions are:

The grey curve is from sampling ϱ, while the black curve is from sampling ϱ′ and then transforming the sampled coordinates.

The exact result (dotted) is given by

(μ1+μ2)2+λ1(s11+s12)2+λ2(s21+s22)2=7.64.

So far, we've seen that sampling from any Gaussian is as good as any other.

The grey curve is from sampling ϱ, while the black curve is from sampling ϱ′ and then transforming the sampled coordinates.

The exact result (dotted) is given by

(μ1+μ2)2+λ1(s11+s12)2+λ2(s21+s22)2=7.64.

So far, we've seen that sampling from any Gaussian is as good as any other.

Application: Finite difference

Consider the function

F(p)=⟨f(p)⟩p=∫dxϱ(p,x)∫dxϱ(p,x)f(p,x),

where x is a scalar or a vector.

We may approximate its derivative using a finite difference formula:

dpdF≈2Δp1(F(p+Δp)−F(p−Δp)).

More elaborately, its log derivative is given by

dpdlnF≈2Δp1⋅F(p)F(p+Δp)−F(p−Δp).

This is equivalently written as

dpdlnF≈2Δp1⋅⟨f(p)⟩p⟨f(p+Δp)⟩p+Δp−⟨f(p−Δp)⟩p−Δp.

Suppose that sampling is the most expensive part of the calculation and so we only wish to sample values from a single distribution.

From the above discussion, we know that there are functions f+(x) and f−(x) such that

dpdlnF≈2Δp1⋅⟨f(p)⟩p⟨f+⟩p−⟨f−⟩p,

where the only approximation is due to the finite difference.

Specifically,

f±(x)=f(p±Δp,x±(x)),

where x±(x) are the appropriate coordinate transformations.

Hence, we may estimate this derivative by sampling from just one distribution rather than three.

For example, consider the multivariate Gaussian ϱ(p,x⃗) with

μ⃗=(−0.61.4),

and

Σ(p)=(2p113/p).

If we choose the function

f(p,x⃗)=p2(x1+x2)2,

then F(p=1)=7.64 is the value we were estimating in the previous section.

Now we will estimate

dpdlnF∣∣∣∣p=1

with Δp=10−4.

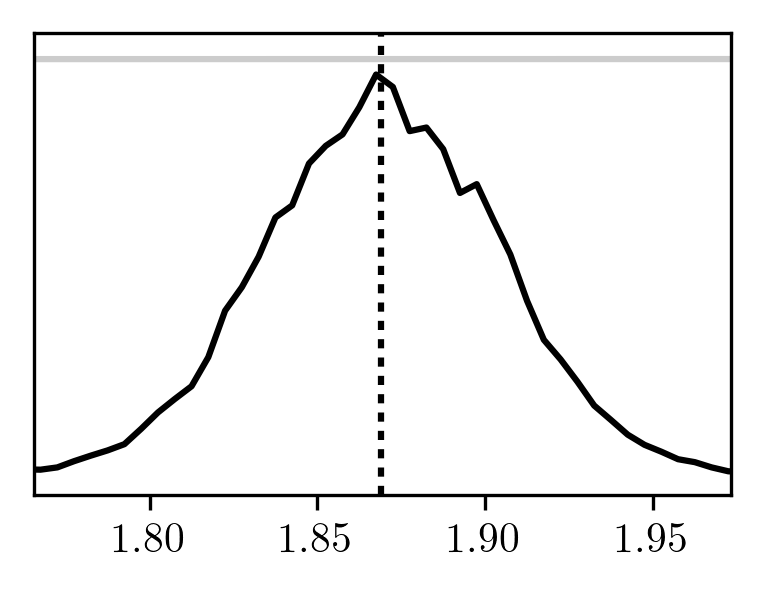

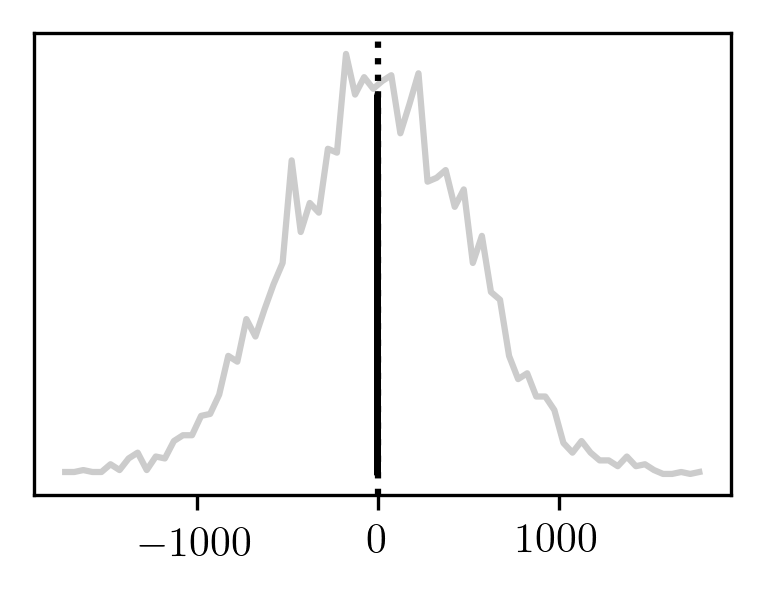

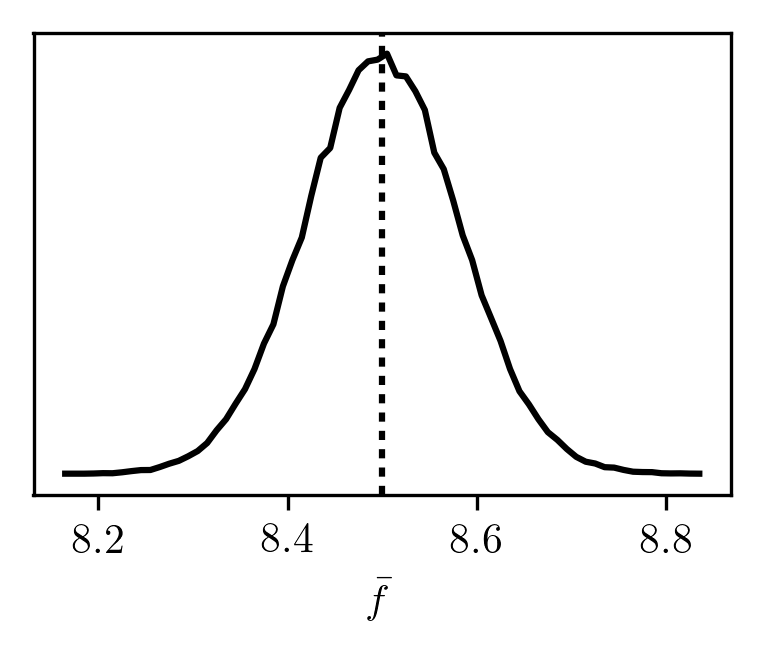

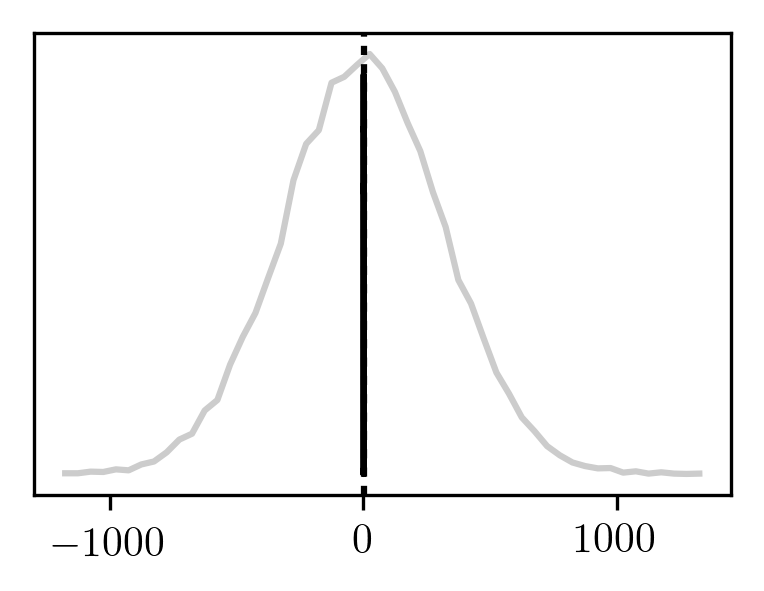

The distributions that we get are:

The black curve is generated by sampling only from ϱ(p,x⃗) (and not ϱ(p+Δp,x⃗) or ϱ(p−Δp,x⃗)).

The dotted line is found by applying the finite difference method to the exact result.

The grey curve (which looks like a horizontal line in the above plot) shows what happens if we sample from all three distributions separately.

To really appreciate the difference between the two, it helps to zoom out:

The black curve is generated by sampling only from ϱ(p,x⃗) (and not ϱ(p+Δp,x⃗) or ϱ(p−Δp,x⃗)).

The dotted line is found by applying the finite difference method to the exact result.

The grey curve (which looks like a horizontal line in the above plot) shows what happens if we sample from all three distributions separately.

To really appreciate the difference between the two, it helps to zoom out:

Surprisingly, it seems that we not only save on sampling effort using this technique, but we also significantly reduce the standard error of the mean!

This reduction might be due to a favourable cancellation of errors that is only possible when the sampled points are the same.

We may also try to evaluate the derivative of a more convoluted expression.

Starting from

F(p)=∫dxϱ(p,x)∫dxg(p,x),

we may always obtain

F(p)=⟨f(p)⟩p=∫dxϱ(p,x)∫dxϱ(p,x)f(p,x)

by setting

f(p,x)=ϱ(p,x)g(p,x).

For our example, we'll use

g(p,x⃗)=exp(−p(x12+x22))

and the same ϱ(p,x⃗) as before.

We obtain a simple form for the expression:

F(p)∝p√λ1λ2.

Because λ1λ2 is the determinant of A, which happens to be constant, we have that

dpdlnF=−p1.

Sampling the finite difference at p=1 yields

and

and

Once again, we see an improvement if we only sample once.

Once again, we see an improvement if we only sample once.

It appears that although sampling from one Gaussian and then swapping it out for a different one is formally a useless procedure, there do exist situations where it provides some benefit.

As expected, this distribution looks very similar to the previous one.

As expected, this distribution looks very similar to the previous one. The grey curve is from sampling , while the black curve is from sampling and then transforming the sampled coordinates.

The exact result (dotted) is given by

So far, we've seen that sampling from any Gaussian is as good as any other.

The grey curve is from sampling , while the black curve is from sampling and then transforming the sampled coordinates.

The exact result (dotted) is given by

So far, we've seen that sampling from any Gaussian is as good as any other. The black curve is generated by sampling only from (and not or ).

The dotted line is found by applying the finite difference method to the exact result.

The grey curve (which looks like a horizontal line in the above plot) shows what happens if we sample from all three distributions separately.

To really appreciate the difference between the two, it helps to zoom out:

The black curve is generated by sampling only from (and not or ).

The dotted line is found by applying the finite difference method to the exact result.

The grey curve (which looks like a horizontal line in the above plot) shows what happens if we sample from all three distributions separately.

To really appreciate the difference between the two, it helps to zoom out:

and

and

Once again, we see an improvement if we only sample once.

Once again, we see an improvement if we only sample once.