Simulating quantum phase estimation

2018-10-07The quantum phase estimation algorithm is an important and elegant algorithm in the field of quantum information.

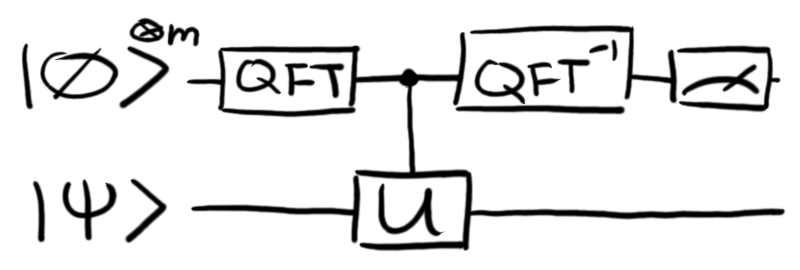

Its circuit is just

We start with a register of qubits initialized to zeros, as well as some state .

We then form a superposition state in the register using the QFT, control the gate acting on with the superposition, and try to undo the superposition with the inverse QFT.

Measuring the register should give us information about the eigenvalues of the unitary operator that the gate implements.

We start with a register of qubits initialized to zeros, as well as some state .

We then form a superposition state in the register using the QFT, control the gate acting on with the superposition, and try to undo the superposition with the inverse QFT.

Measuring the register should give us information about the eigenvalues of the unitary operator that the gate implements.

At a glance, this is a very simple algorithm, with few moving parts. Nevertheless, it has been suggested to me that there are some subtleties that float to the surface when trying to use it in practice to find the ground state of a Hamiltonian. The best way to see what issues arise is to try it out and run the circuit with a specific Hamiltonian in mind.

Analytical result

Quantum Fourier transform

First, let's see what we can expect from the circuit. Recall that the QFT takes a basis state (where is an -bit integer with bits ) and maps it to The inverse QFT does the same, but with the sign flipped on the phase: It's no surprise that A special case of the QFT is which is the even superposition of all basis states. This state can also be generated with Hadamard gates:

Controlled unitary

The controlled unitary in the circuit could use some elaboration. An arbitrary gate controlled by a single qubit is perfectly cromulent: if the control qubit is 0, the operation is not applied; if the qubit is 1, it is applied. See, for example, the beloved controlled NOT gate, which even has its own dedicated notation.

More rigorously, we could say that In block matrix/operator form, this is where we have also expressed it as a direct sum.

The meaning of having multiple control qubits is not immediately clear from the one qubit case, but we just use it as a convenient shorthand for a natural generalization. Consider the parameterization which allows us to say

We are free to define any family of unitary operators and write as the definition of a controlled gate with multiple control qubits. An obvious choice for this family is the one we made above, but with a larger dynamic range: Then This allows us to repeat a different number of times depending on the values of the control register. Crucially, by placing the control register in a superposition of all its values, we obtain a superposition of all the corresponding repeated applications of the gate !

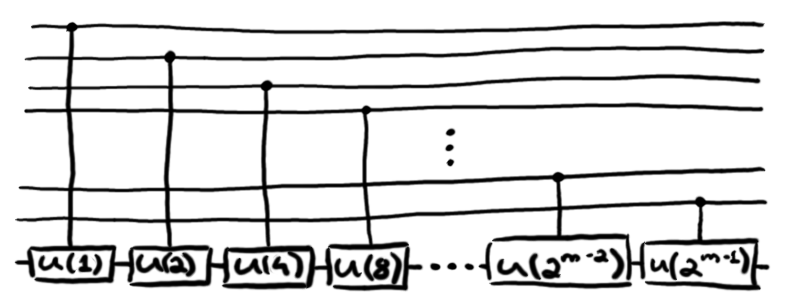

A useful observation is that so can be decomposed into a product over the bits of . Since each bit in the control register gets to control its own unitary:

This expression may seem like a big leap, but it follows directly from the observations that and which lead to

Thus, if we wanted to be more explicit, we could have drawn the controlled unitary in the original circuit as

but this would have made it harder to justify our use of the word "elegant".

but this would have made it harder to justify our use of the word "elegant".

Time evolution

Behind every unitary operator is a Hamiltonian; that is, for every unitary , we may write where is a Hermitian operator with units of energy, has units of time, and is . Because at the end of the day we are interested in finding the eigenvalues of a Hamiltonian, we will adopt this picture, where we can think of as being the propagator for time evolution under the influence of the Hamiltonian for a duration .

We note that the eigenvectors of and are the same, and for every eigenvalue of , the corresponding eigenvalue of is . We also note that our sign convention corresponds to backwards time evolution, but this makes the math more convenient later on; the sign convention on the QFT is ultimately to blame.

In line with the above reasoning, we can think about repeating this time evolution times, where is an -bit integer.

We can define

as just slightly more than the maximum possible evolution time.

For and a unitary for an arbitrary gate , this can be illustrated as follows:

Application of the circuit

The initial state is and we've already seen that the QFT transforms this to The controlled unitary then results in Inserting a complete set of eigenstates of , we write this as where is the overlap of our initial state with the th eigenstate of the Hamiltonian.

Finally, the inverse QFT gives us If were exactly an -bit integer, this would leave us with We could then measure the qubit register, multiply the result by , and have exactly the th eigenenergy of with probability . If we could prepare the system in an eigenstate of , then we would always measure the energy of that eigenstate.

Alas, that's not possible (unless we have some insider information and can tune appropriately), so we instead express the energy as where is the nearest integer to and is the remaining fractional part. Then it follows that the final state may be written as

When we measure the control register, the probability of obtaining a given is

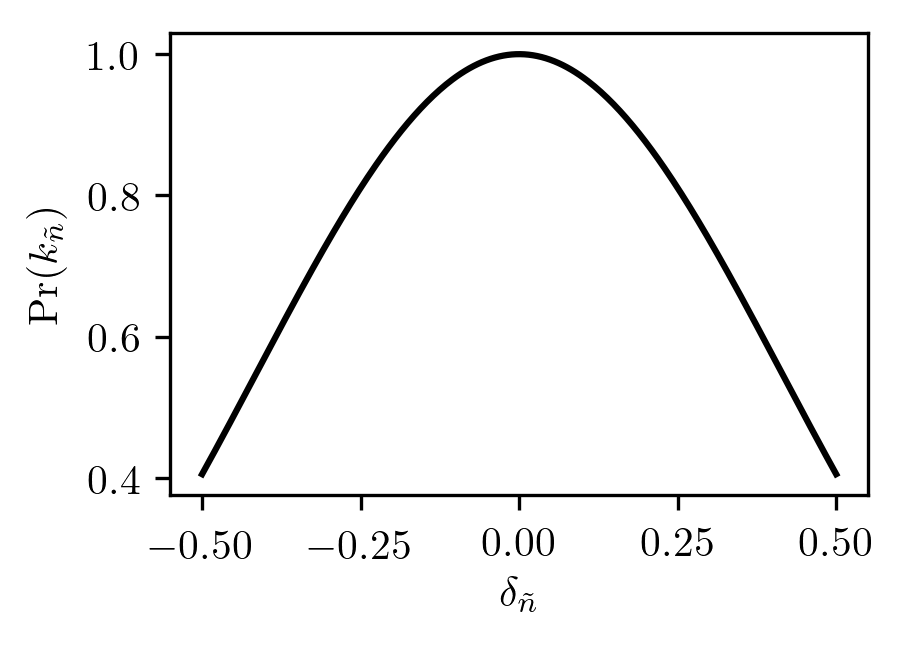

For simplicity, let's suppose that the system was initialized in the eigenstate (so ); then the probability is just

When (i.e. we have the desired outcome of a measurement consistent with the eigenenergy), this simplifies further to

For , this function looks like

It's similar for other ; the overall idea is that we are more likely to get the right answer when is smaller.

Surprisingly, for large , the probability can be quite low, even though we started with the system already in an eigenstate.

As we'll see later, this isn't a cause for concern because this is just a consequence of adjacent bins sharing the probability.

It's similar for other ; the overall idea is that we are more likely to get the right answer when is smaller.

Surprisingly, for large , the probability can be quite low, even though we started with the system already in an eigenstate.

As we'll see later, this isn't a cause for concern because this is just a consequence of adjacent bins sharing the probability.

If we don't suppose that we started from a single eigenstate, then there are several eigenvalues that could share the same . Therefore a given could match more than one eigenstate and things get more complicated. Let's not go there just yet.

Wait, hold on, what's this about modular arithmetic?

Given an eigenvalue of the Hamiltonian , we can trivially find the corresponding eigenvalue of . However, because the complex logarithm is multivalued, the inverse procedure (to find the phase of ) can only get us the fractional part: for any we pick to be our answer, every element of the set is also a valid answer. It's no surprise that this multivaluedness is an issue for us, since the algorithm in question is specifically for finding eigenvalues of unitary operators, and we're doing our best to apply it to Hermitian operators.

Suppose, for example, that and . Then Here are some hypothetical eigenvalues of with the corresponding values in the algorithm:

| () | |||||

| () | |||||

| () | |||||

| () | |||||

| () | |||||

| () | |||||

| () | |||||

| () | |||||

| () | |||||

| () |

Naturally, we can only resolve 16 different outcomes in this example, and each one can represent infinitely many eigenvalues. Repeats in this table are marked with arcane symbols.

In general, measuring a particular value (assuming that we are lucky and the measurement reflects the desired state in the first place) implies that there is an eigenvalue within

of

for some integer .

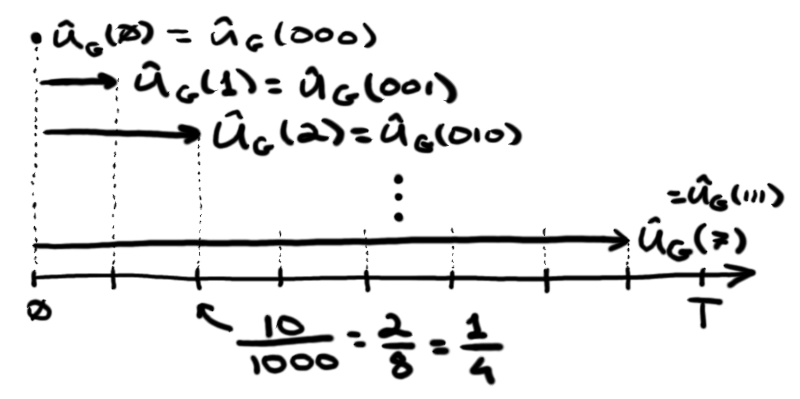

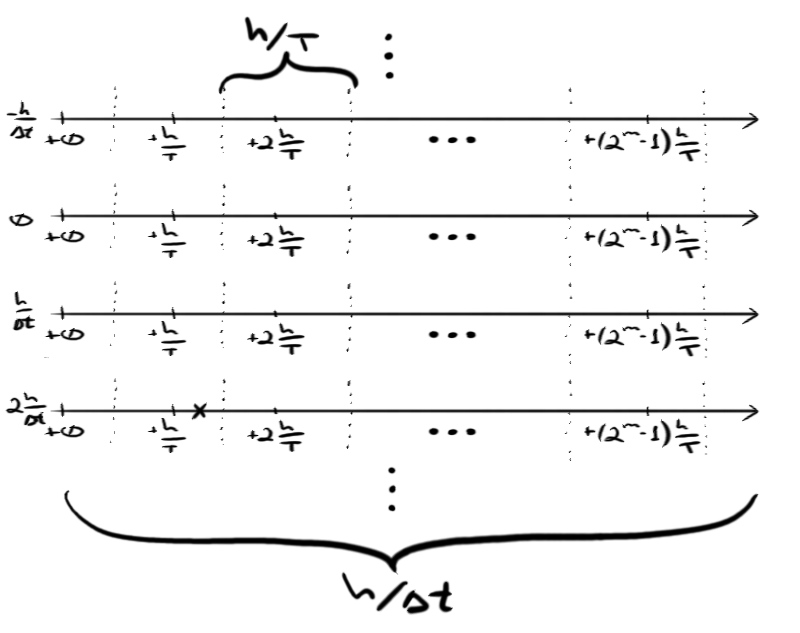

This partitions the real numbers into a lattice of intervals of length indexed by and .

That is, every real number can be identified with the tuple and written as

where is an -bit integer, is any old integer, and .

Schematically, we can draw the real number line split into infinitely many intervals of length :

The point marked by the cross is at approximately .

The point marked by the cross is at approximately .

In this example, so could mean a band of values (of width ) around , , , and so on. We could increase in order to shrink the size of each repeated segment or increase in order to grow the dynamic range within a repeated segment; both of these increase and give us a tighter band around each value.

Simulated result

With all that in mind, let's set up some simulations of the circuit. We'll try it in a couple of different ways to get a better sense of how this could work in practice.

Harmonic oscillators

To warm up, let's attempt a simple black box calculation, where we just write the circuit in terms of exact unitary matrices that do all the work for us. Consider a pair of coupled harmonic oscillators with the Hamiltonian A bit of grunt work (with help from Appendix C of my MSc thesis) reveals that when , the ground state energy of this Hamiltonian is given by We'll set so that the coupling is not too strong, and we'll be expecting an energy around .

In the tensor product basis of the individual oscillators, the matrix elements are and we can use them to construct the Hamiltonian in a finite basis:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 | julia> using LinearAlgebra

julia> eye(n) = Matrix{Float64}(I, n, n);

julia> q(n) = Symmetric(diagm(1 => sqrt.(1.0:(n-1))))/sqrt(2);

julia> function H(n)

result = kron(diagm(0 => 0.0:(n-1)) + 0.5I, eye(n))

result .+= kron(eye(n), diagm(0 => 0.0:(n-1)) + 0.5I)

result .+= 0.1 * kron(q(n), q(n))

result

end;

julia> H(3)

9×9 Array{Float64,2}:

1.0 0.0 0.0 0.0 0.05 0.0 0.0 0.0 0.0

0.0 2.0 0.0 0.05 0.0 0.0707107 0.0 0.0 0.0

0.0 0.0 3.0 0.0 0.0707107 0.0 0.0 0.0 0.0

0.0 0.05 0.0 2.0 0.0 0.0 0.0 0.0707107 0.0

0.05 0.0 0.0707107 0.0 3.0 0.0 0.0707107 0.0 0.1

0.0 0.0707107 0.0 0.0 0.0 4.0 0.0 0.1 0.0

0.0 0.0 0.0 0.0 0.0707107 0.0 3.0 0.0 0.0

0.0 0.0 0.0 0.0707107 0.0 0.1 0.0 4.0 0.0

0.0 0.0 0.0 0.0 0.1 0.0 0.0 0.0 5.0

julia> eigvals(H(3))[1]

0.9987460864290645

julia> eigvals(H(10))[1]

0.998746073110322

julia> eigvals(H(20))[1]

0.998746073110361

|

We only need about 10 basis functions per oscillator to get a good ground state energy, and as expected it's only slightly perturbed by the coupling.

Since we don't have any specific units of energy in mind, we'll set and measure times in units of reciprocal energy. To get the ground state energy close to the middle of the bin, we'll need to have .

The QFT is straightforward:

1 2 3 4 5 6 7 8 | julia> outer(v) = v * v';

julia> qft(m) = exp.(2pi*im*outer(0:(2^m-1))/2^m)/sqrt(2^m);

julia> round.(qft(2); digits=3)

4×4 Array{Complex{Float64},2}:

0.5+0.0im 0.5+0.0im 0.5+0.0im 0.5+0.0im

0.5+0.0im 0.0+0.5im -0.5+0.0im -0.0-0.5im

0.5+0.0im -0.5+0.0im 0.5-0.0im -0.5+0.0im

0.5+0.0im -0.0-0.5im -0.5+0.0im 0.0+0.5im

|

The controlled unitary is nearly as straightforward:

1 2 3 | julia> oneat(m, j) = (A = zeros(2^m); A[j] = 1; A);

julia> U(n, dt) = exp(2pi*im*H(n)*dt);

julia> CU(m, n, dt) = sum(kron(diagm(0 => oneat(m, j)), U(n, dt*(j-1))) for j in 1:2^m);

|

Note the extra factor of because we've set , not .

The easiest thing to do is to start from the exact ground state of the coupled harmonic oscillators:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 | julia> using Qutilities

julia> function circuit(m, n, dt, v)

state = kron(oneat(m, 1), v)

state = kron(qft(m), eye(n^2)) * state

state = CU(m, n, dt) * state

state = kron(qft(m)', eye(n^2)) * state

state

end;

julia> probs(m, n, state) = real(diag(ptrace(state*state', (2^m, n^2), 2)));

julia> m = 3;

julia> n = 10;

julia> dt = 0.12;

julia> v = eigvecs(H(n))[:, 1];

julia> @time result = circuit(m, n, dt, v);

0.267273 seconds (36.49 k allocations: 208.943 MiB, 52.51% gc time)

julia> probs(m, n, result)

8-element Array{Float64,1}:

0.0019258161476215308

0.9945138775769432

0.00164707690823041

0.0005043909090011376

0.0003010558351650097

0.00026042359597120573

0.00030923686002463913

0.0005381221670408925

|

To do the partial trace over the harmonic oscillators and obtain outcome probabilities for measuring the control register, I'm using the partial trace implementation from the Qutilities package. We've chosen to use 3 qubits for the time being, giving us 8 possible outputs. The probability is clustered around , which means that our estimate for the answer is

1 2 | julia> 1/(2^m * dt)

1.0416666666666667

|

which is indeed less than

1 2 | julia> 0.5/(2^m * dt)

0.5208333333333334

|

away from the correct answer. It's actually only

1 2 | julia> 1/(2^m * dt) - eigvals(H(n))[1]

0.0429205935563447

|

away from the correct answer, which is why our probabilities were so sharp.

Consider instead

1 2 3 4 5 6 7 8 9 10 11 12 13 | julia> dt = 0.0625784688247742;

julia> @time result = circuit(m, n, dt, v);

0.249420 seconds (36.47 k allocations: 207.874 MiB, 57.87% gc time)

julia> probs(m, n, result)

8-element Array{Float64,1}:

0.41053347451699485

0.4105334745170109

0.050622325138180865

0.02260097956518265

0.01624322077963387

0.016243220779633978

0.022600979565182543

0.05062232513818

|

The only change was in the duration, so that the eigenvalue falls directly between the first two bins, and we do see that the bulk of the probability is split evenly between them. It's no coincidence that

1 2 | julia> abs2((1-exp(2pi*im*0.5))/(1-exp(2pi*im*0.5/2^m)))/2^(2m)

0.41053347451700284

|

If we increase without changing , we can better resolve the energy:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 | julia> m = 6;

julia> n = 10;

julia> dt = 0.12;

julia> @time result = circuit(m, n, dt, v);

35.094506 seconds (292.70 k allocations: 79.125 GiB, 10.46% gc time)

julia> probs(m, n, result)[6:12]

7-element Array{Float64,1}:

0.010572912417752508

0.026927549529518778

0.1668693082196445

0.6899738964388513

0.042462317880069926

0.013872943502266583

0.006822244464881193

|

(This is two orders of magnitude slower.) We see that the majority of the probability is in bin 8, with a little bit in bin 7. This is reasonable, as the true energy is closer to

1 2 | julia> 8/(2^m * dt)

1.0416666666666667

|

than to

1 2 | julia> 7/(2^m * dt)

0.9114583333333334

|

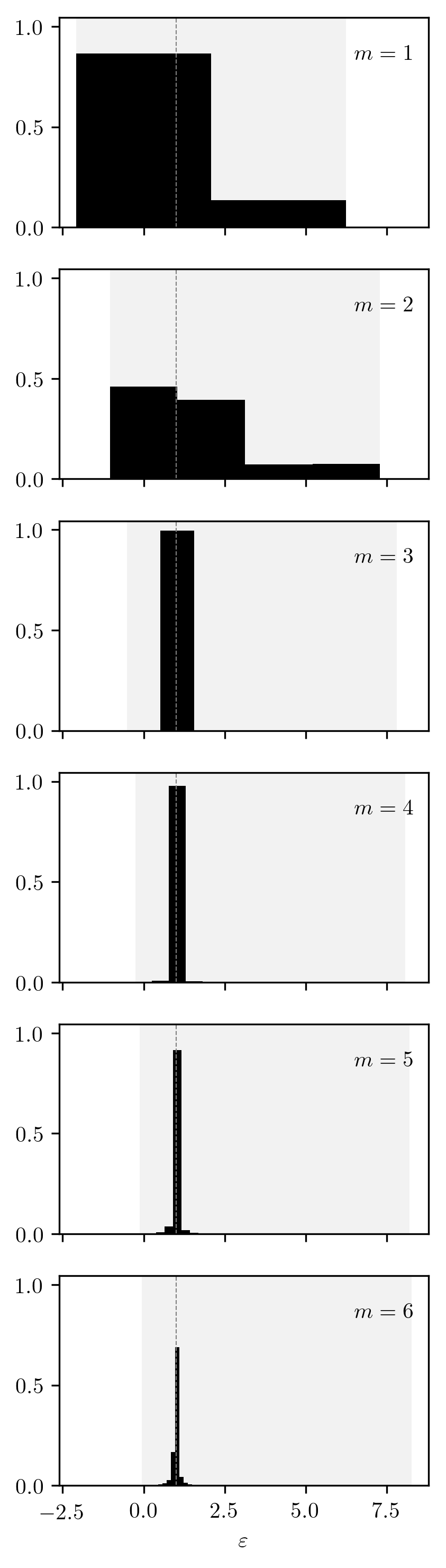

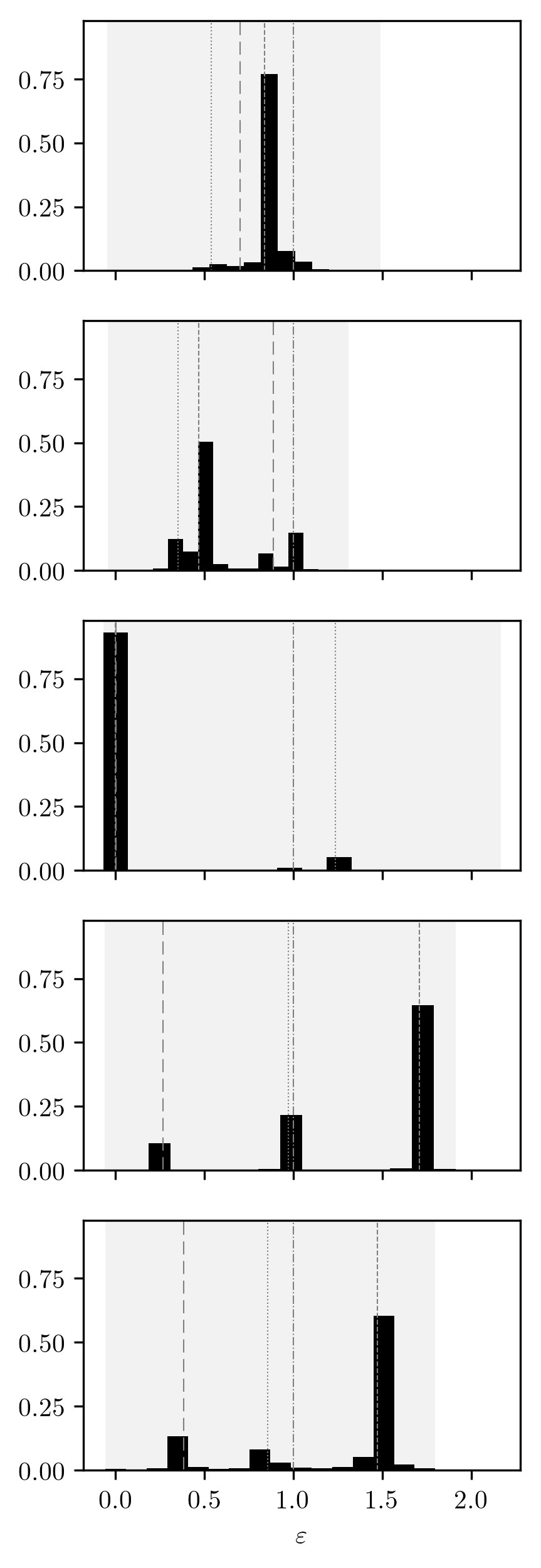

Here's what the probability distribution looks like for a variety of values:

Because we're keeping constant at , the range of doesn't change, but each bin gets smaller and the negative region shrinks.

Because we're keeping constant at , the range of doesn't change, but each bin gets smaller and the negative region shrinks.

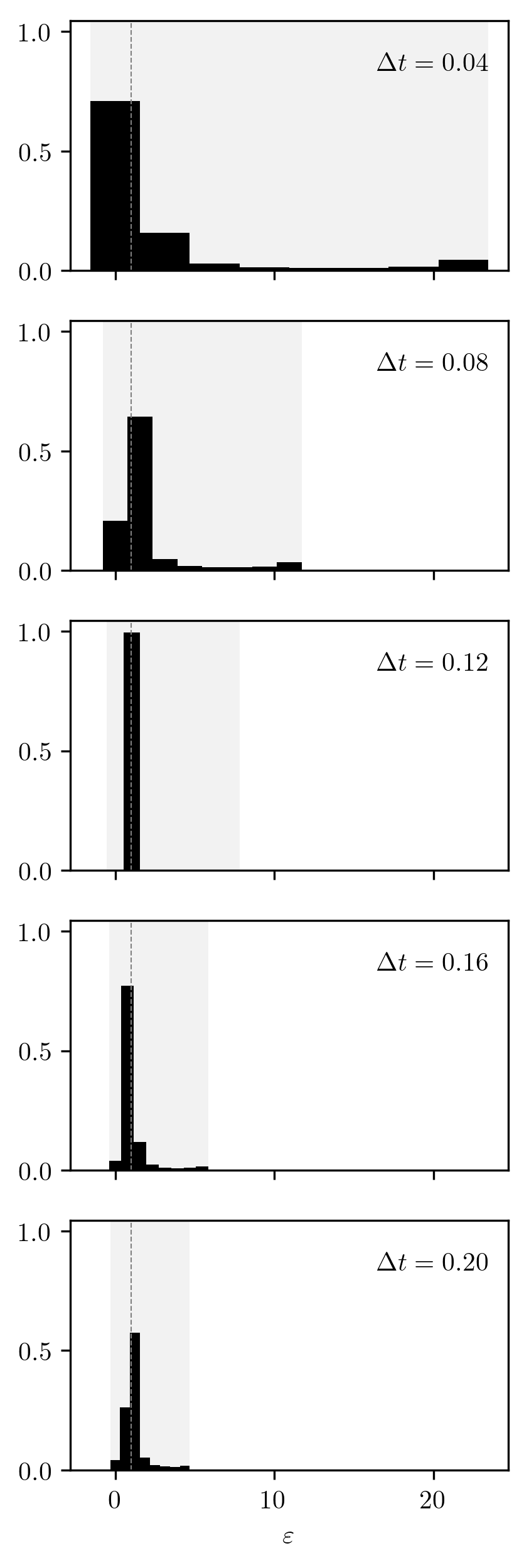

We can also keep constant at and increase (and ):

The long and the short of it is that this seems to always work reasonably well, but it works best when the duration is tuned correctly.

The long and the short of it is that this seems to always work reasonably well, but it works best when the duration is tuned correctly.

The energy of the first excited state is

1 2 3 4 | julia> eigvals(H(10))[2]

1.947429371160866

julia> eigvals(H(20))[2]

1.9474293711608688

|

We can also correctly predict it:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | julia> m = 3;

julia> n = 10;

julia> dt = 0.12;

julia> v = eigvecs(H(n))[:, 2];

julia> @time result = circuit(m, n, dt, v);

0.124010 seconds (36.49 k allocations: 208.943 MiB, 3.94% gc time)

julia> probs(m, n, result)

8-element Array{Float64,1}:

0.005527928092659891

0.02212701112663486

0.9460673221302545

0.013450918545547691

0.004501970310266165

0.0027946158277947124

0.002487762931857694

0.0030424710349814745

|

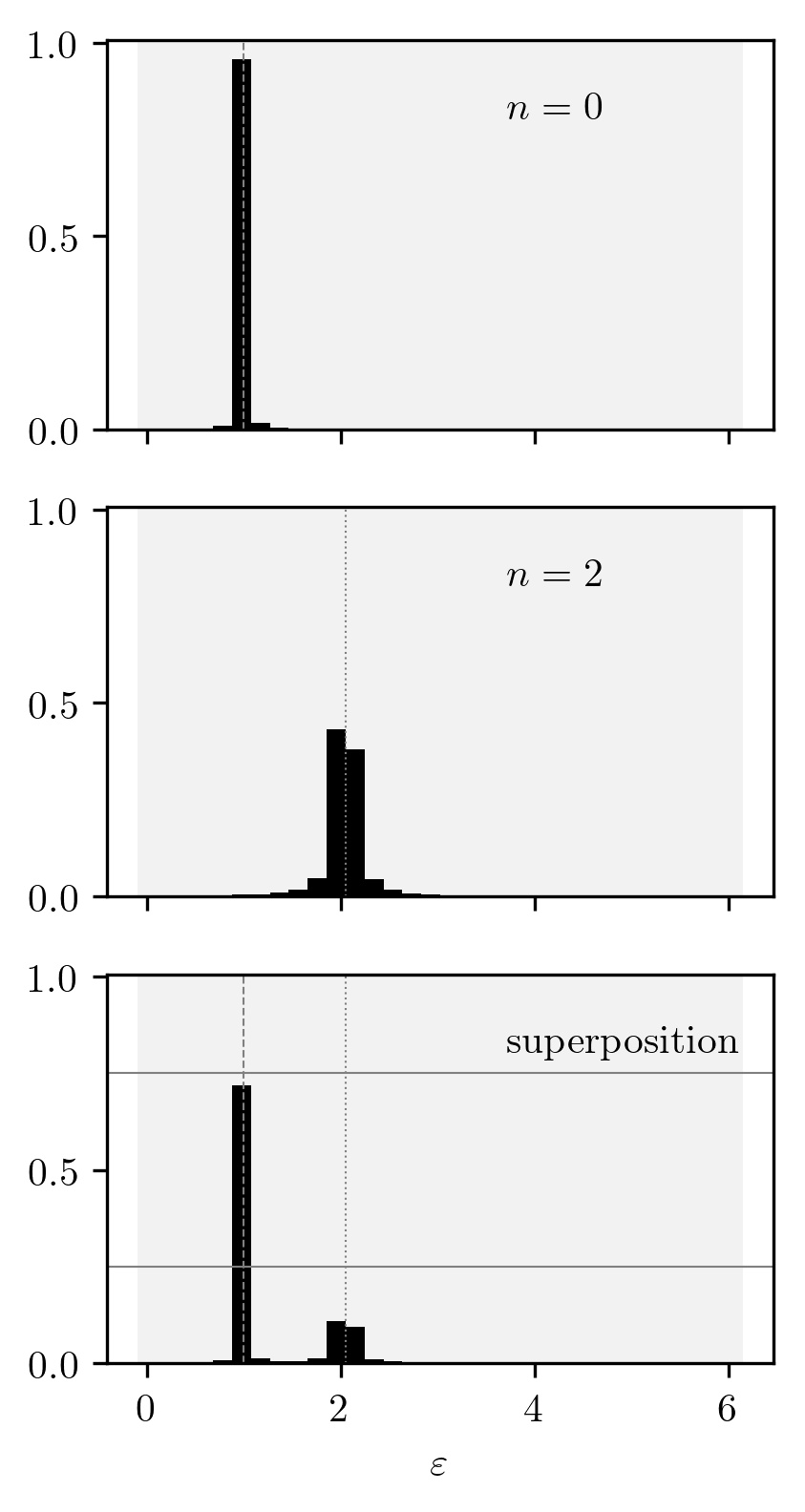

Finally, here's an example (with and ) of the two eigenstates and , as well as the superposition

The horizontal lines are at and .

If we start with a superposition of eigenstates, we seem to obtain the weighted sum of the probability distributions.

The horizontal lines are at and .

If we start with a superposition of eigenstates, we seem to obtain the weighted sum of the probability distributions.

This example highlights the fact that the SUT doesn't need to be expressed in terms of qubits: the input state can in principle be whatever state you need it to be. Although the control register is expressed in the basis of two-level systems, the remaining subsystem in this example is a genuine many-body quantum system. Of course, we must represent it by truncating its basis for practical reasons, but if we could implement a controlled unitary for this algorithm in the lab, there is no conceptual reason why it would need to act on a finite Hilbert space.

Transverse field Ising model

To appeal more to the spin-minded, we also look at the critical transverse field Ising model. The Hamiltonian for spins is and the formula gives us a ground state energy of . Finally, a negative energy to really play with the modular wraparound!

Recall that and This means that (starting over in a fresh session) the Hamiltonian looks like

1 2 3 4 5 6 7 8 | julia> H = -kron([1 0; 0 -1], [1 0; 0 -1]);

julia> H += -kron([0 1; 1 0], [1 0; 0 1]);

julia> H += -kron([1 0; 0 1], [0 1; 1 0])

4×4 Array{Int64,2}:

-1 -1 -1 0

-1 1 0 -1

-1 0 1 -1

0 -1 -1 -1

|

Because it's a bit circular to try to find an eigenvalue of a Hamiltonian by first exactly exponentiating it (which requires one to know all its eigenvalues), we will be more honest this time. We will also split the register-controlled unitary into several qubit-controlled unitaries for purposes of realism. The QFT is annoying to express in terms of more primitive gates, so we'll forgo that.

Since we're going to break the controlled unitary up into little pieces, we're going to need to evaluate for (again having set and using the appropriate units of time). To avoid diagonalizing , we will Trotterize the exponential: where Sadly, this gives us one more parameter to tweak, but this is a tolerable burden.

Speaking of parameters, if we pick and , we should get the ground state somewhere close to the middle of bin 5. Specifically,

1 2 3 4 | julia> m = 3;

julia> dt = 0.17;

julia> 5/(2^m * dt) - 1/dt

-2.205882352941176

|

which is off by only

1 2 3 | julia> using LinearAlgebra

julia> 5/(2^m * dt) - 1/dt - eigvals(H)[1]

0.030185624558613622

|

compared to the bin width

1 2 | julia> 1/(2^m * dt)

0.7352941176470588

|

Trivially, the matrix representation of is Nearly as trivially, the matrix representation of is

Now that we have all these pieces, we can code them up:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 | julia> eye(n) = Matrix{Float64}(I, n, n);

julia> U_sigma_z(tau) = diagm(0 => exp.(2pi*im*[-1, 1, 1, -1]*tau));

julia> round.(U_sigma_z(0.25); digits=3)

4×4 Array{Complex{Float64},2}:

0.0-1.0im 0.0+0.0im 0.0+0.0im 0.0+0.0im

0.0+0.0im 0.0+1.0im 0.0+0.0im 0.0+0.0im

0.0+0.0im 0.0+0.0im 0.0+1.0im 0.0+0.0im

0.0+0.0im 0.0+0.0im 0.0+0.0im 0.0-1.0im

julia> U_sigma_x(tau) = [cos(2pi*tau) -im*sin(2pi*tau); -im*sin(2pi*tau) cos(2pi*tau)];

julia> round.(U_sigma_x(0.25); digits=3)

2×2 Array{Complex{Float64},2}:

0.0+0.0im 0.0-1.0im

0.0-1.0im 0.0+0.0im

julia> outer(v) = v * v';

julia> qft(m) = exp.(2pi*im*outer(0:(2^m-1))/2^m)/sqrt(2^m);

julia> oneat(m, j) = (A = zeros(2^m); A[j] = 1; A);

julia> function CU(m, j, tau)

result = zeros(ComplexF64, 2^m*4, 2^m*4)

for k in 1:2^m

if (k-1) & (1<<(j-1)) == 0

result .+= kron(diagm(0 => oneat(m, k)), eye(2), eye(2))

else

X = kron(diagm(0 => oneat(m, k)), U_sigma_z(tau))

X *= kron(diagm(0 => oneat(m, k)), U_sigma_x(tau), eye(2))

X *= kron(diagm(0 => oneat(m, k)), eye(2), U_sigma_x(tau))

result .+= X

end

end

result

end;

julia> using Qutilities

julia> function circuit(m, dt, P0, v)

state = kron(oneat(m, 1), v)

state = kron(qft(m), eye(4)) * state

for j in 1:m

for _ in 1:(P0*2^(j-1))

state = CU(m, j, dt/P0) * state

end

end

state = kron(qft(m)', eye(4)) * state

state

end;

julia> probs(m, state) = real(diag(ptrace(state*state', (2^m, 4), 2)));

julia> v = eigvecs(H)[:, 1];

julia> for P0 in [1, 2, 5, 10, 100, 1000]

println(P0)

@time result = circuit(m, dt, P0, v);

println(probs(m, result)[6])

end;

1

0.002621 seconds (1.86 k allocations: 2.976 MiB)

0.10734424166614077

2

0.005387 seconds (3.70 k allocations: 5.908 MiB)

0.9075674144312382

5

0.015230 seconds (9.20 k allocations: 14.703 MiB, 10.64% gc time)

0.9890106048450764

10

0.027400 seconds (18.37 k allocations: 29.361 MiB, 5.04% gc time)

0.9934267922822306

100

0.257648 seconds (183.43 k allocations: 293.210 MiB, 4.03% gc time)

0.9945435690246536

1000

2.541286 seconds (1.83 M allocations: 2.863 GiB, 4.22% gc time)

0.9945539075921477

|

It appears that we get quite good results with just 10 Trotter factors, although we are able to improve the probabilities beyond that with rapidly diminishing results.

Despite the realism achieved in CU by having a separate controlled gate for each term of the Hamiltonian, I must admit that I've still cheated for all of the results, by choosing not only an appropriate duration, but also the exact ground state as the starting state.

Trying random values for these quantities should still allow us to spot all four eigenvalues of this Hamiltonian.

For reference, they are

1 2 3 4 5 6 | julia> eigvals(H)

4-element Array{Float64,1}:

-2.23606797749979

-0.9999999999999998

1.0

2.2360679774997885

|

Here are some random attempts with and :

The peaks are unpredictably distributed, but always in line with the eigenvalues!

Note how the exact eigenvalues jump around between attempts because the varying total duration changes the energy range.

The peaks are unpredictably distributed, but always in line with the eigenvalues!

Note how the exact eigenvalues jump around between attempts because the varying total duration changes the energy range.

In conclusion, I think it's safe to claim that the algorithm works extremely well in practice for its intended usage (finding the eigenvalue of a known eigenvector of a unitary operator), but it can also be applied to extract some eigenvalue information out of a Hermitian operator.

Before we part ways, I want to show you one last example.

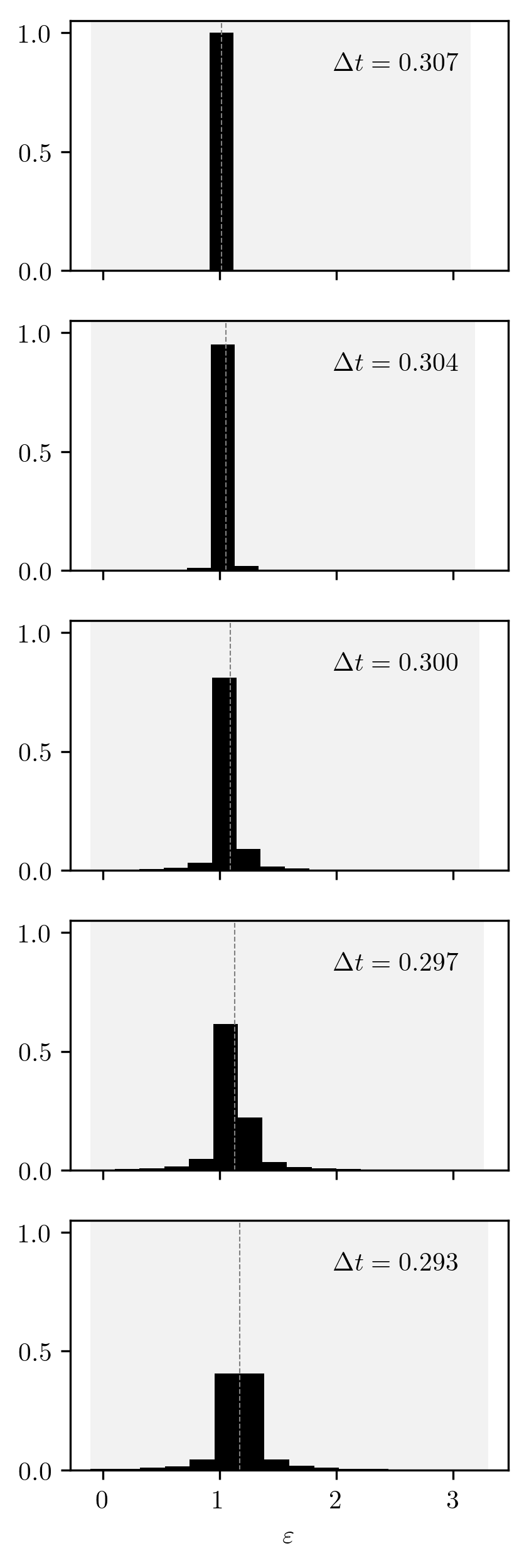

The following plots show the change in the distribution as is gradually changed so that the target eigenvalue moves from the exact middle of a bin to the very edge of that bin:

The distribution gradually melts, but not all the way into a puddle.

The distribution gradually melts, but not all the way into a puddle.